Frameworks

Running SOL inside a framework is the easiest mode of operation, because SOL handles most parameters and options automatically. In principle, you only need to load SOL and call sol.optimize(model, args, kwargs={}, *, framework=None, vdims=None, **fwargs) on your target model.

See the subchapters for details on the individual frameworks and for example code.

| Parameter | Description |
|---|---|
| model | The model you want to optimize using SOL. |
| args | Either a list or a tuple of framework tensors or other inputs. |
| kwargs | A dictionary of named arguments. |
| framework | The framework in which the returned model shall be executed. By default, the same framework as the input model. |
| vdims | A list or tuple containing the values you want to assign to the variable dimensions. Valid values are positive integers, or None for variable values. |
| fwargs | A dictionary containing framework-specific flags. See the corresponding framework for the available flags. |
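
As a minimal sketch of the call described above, the helper below forwards a model and its example inputs to sol.optimize. The helper name optimize_model and its parameter names are hypothetical; only the sol.optimize signature is taken from this page. SOL is imported lazily, so the snippet assumes SOL is installed only at the point where the helper is actually called.

```python
def optimize_model(model, example_inputs, example_kwargs=None):
    """Hypothetical convenience wrapper around sol.optimize (illustration only)."""
    import sol  # assumption: SOL is installed and importable as `sol`

    # args must be a list or tuple; kwargs must be a dictionary of named arguments.
    args = list(example_inputs)
    kwargs = dict(example_kwargs or {})
    return sol.optimize(model, args, kwargs=kwargs)
```

With PyTorch, for example, example_inputs would typically be a list of tensors with the shapes the model expects.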

Generic Model Functions

SOL models are implemented using the framework’s own model structure, so they provide the same functionality as the framework’s models, except that SOL models always assume training = False for inference runs and training = True for training runs. Additionally, they support the following functions:

| Command | Description |
|---|---|
| model.sol_network() | Returns the hash of the network. |

| Command | Description |

|---|---|

sol.check_version() |

Checks if a new version of SOL is available |

sol.config["..."] = ... |

Sets config options |

sol.config.print() |

Prints all config options and their current values |

sol.optimize(...) |

Details here |

sol.deploy(...) |

Details here |

sol.cache.clear() |

Clears Sol’s build cache, to enforce rebuild of models. |

sol.device.enable(device) |

Enables code generation for the specified device. By default all available devices will be build. Device needs to be sol.device.[x86, nvidia, ve] |

sol.device.disable(device) |

See sol.device.enable(device) |

sol.device.set(device, deviceIdx) |

Forces Sol to run everything on the given device. If the data is not located on the target device, it will be explicitly copied between the host and the device. |

sol.__version__ |

SOL version string |

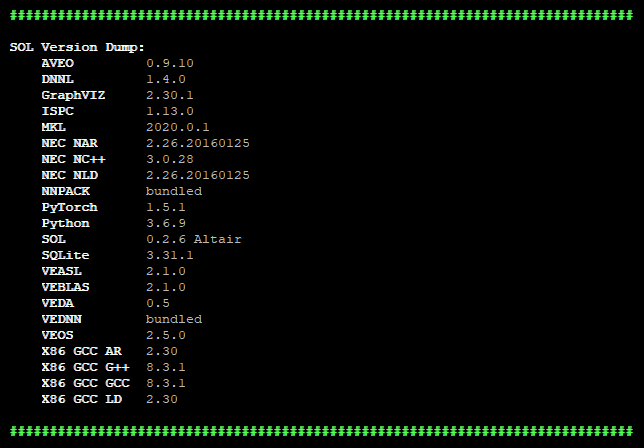

sol.versions() |

Prints versions of used compiler and libraries.  |

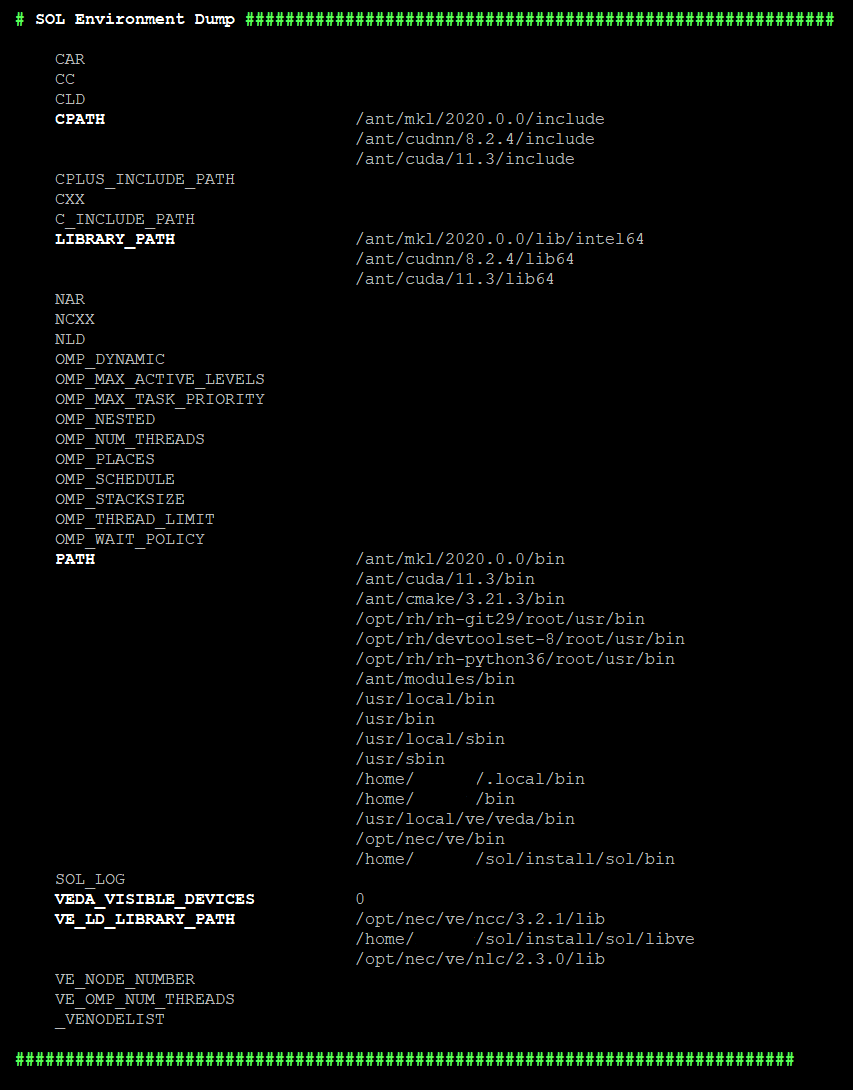

sol.env() |

Prints Env Vars + values used by SOL.  |

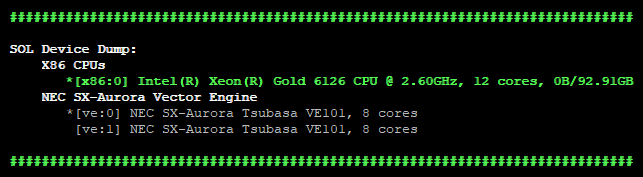

sol.devices() |

Prints overview of available devices.  Green: device is initialized (has been used for computations). Star: default device. Green: device is initialized (has been used for computations). Star: default device. |

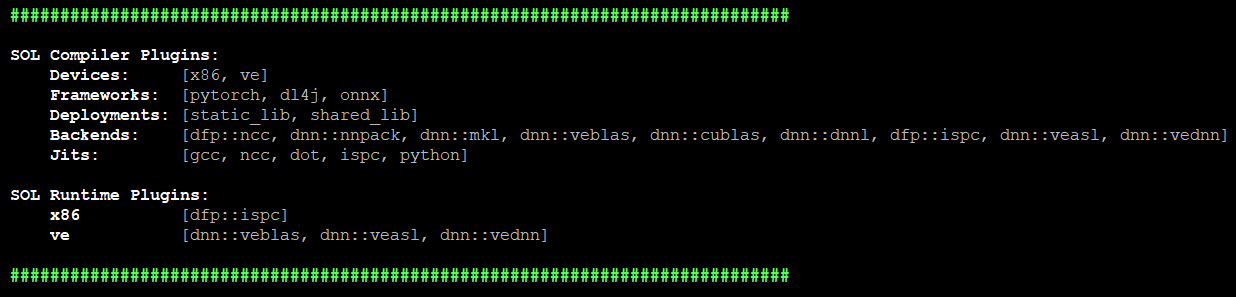

sol.plugins() |

Prints overview of loaded plugins.  |

sol.seed(deviceType=None, deviceIdx=None) |

Fetches the global seed (both == None), the device type’s seed or the seed of a specific device. |

sol.set_seed(seed, deviceType=None, deviceIdx=None) |

Sets the seed. |

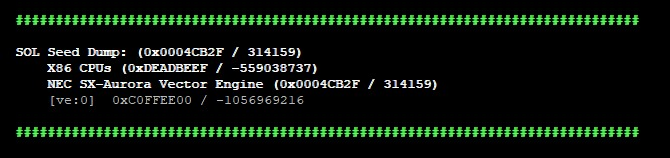

sol.seeds() |

Prints seed overview:  |

For offloading, the data needs to be on the host system; otherwise an implicit copy is not possible!
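
The device and seed functions listed above could be combined as in the following sketch. The helper pin_to_device and its parameters are hypothetical. It assumes that sol.device.set accepts the same sol.device.[x86, nvidia, ve] values as sol.device.enable, and that calling sol.set_seed without a device type or index sets the global seed.

```python
def pin_to_device(device_name, device_idx=0, seed=None):
    """Hypothetical helper: force SOL onto one device and optionally seed it."""
    import sol  # assumption: SOL is installed and importable as `sol`

    # Look up sol.device.x86, sol.device.nvidia or sol.device.ve by name.
    device = getattr(sol.device, device_name)

    # Force SOL to run everything on the chosen device.
    sol.device.set(device, device_idx)

    # Assumption: without deviceType/deviceIdx this sets the global seed.
    if seed is not None:
        sol.set_seed(seed)

    # With both arguments left at None, sol.seed() fetches the global seed.
    return sol.seed()
```

A call such as pin_to_device("x86", 0, seed=42) would then select the first x86 device before any model is executed.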

Variable Dimensions

Since SOL v0.5 we support variable dimensions (VDims). When you run sol.optimize, SOL will automatically detect variable dimensions. The acceptable input shapes get printed by SOL after analyzing the model.

    Inputs: in_0 [#0, 5, #1, #2, #3]
    Outputs: out_0 {
      "A": [#0, 5, #1, #2, #3],
      "B": [#0, 5, #1, 3, 3],
      "C": [#0, 5, #1, 5, 7],
    }

Here we see that we have 4 VDims (#0 to #3). Depending on the structure of your neural network, SOL will restrict certain dimensions to be of fixed size. For performance reasons, SOL disables all VDims by default, so you need to enable them by hand using:

sol_model = sol.optimize(model, [torch.rand(5, 5, 5, 5, 5)], vdims=[True, False, 1])

This enables #0 to accept any size. #1 will only accept the size that was used when parsing the model (the default behavior). #2 will only accept a size of 1. As #3 is not set, it uses the default behavior. So the compatible shape for this example is [*, 5, 5, 1, 5].
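
To make the rule above concrete, the following pure-Python sketch reproduces how the compatible shape is derived. It does not use SOL at all. The function accepted_shape and its representation of the shape pattern are hypothetical and exist only for illustration. It follows the True / False / integer convention of the example above and additionally treats None as "any size", as stated in the parameter table.

```python
def accepted_shape(pattern, parsed_shape, vdims):
    """Illustrative only: derive the shape pattern that is accepted for given vdims.

    pattern      -- input shape as printed by SOL, e.g. ["#0", 5, "#1", "#2", "#3"]
    parsed_shape -- shape of the example input used when parsing the model
    vdims        -- per-VDim settings: True/None (any size), False (parsed size), int (fixed)
    """
    shape = []
    for dim, parsed in zip(pattern, parsed_shape):
        if not (isinstance(dim, str) and dim.startswith("#")):
            shape.append(dim)  # dimension fixed by the network structure
            continue
        idx = int(dim[1:])
        # VDims that are not listed keep the default behavior (parsed size).
        setting = vdims[idx] if idx < len(vdims) else False
        if setting is True or setting is None:
            shape.append("*")           # any size
        elif setting is False:
            shape.append(parsed)        # size used when parsing the model
        else:
            shape.append(int(setting))  # explicitly fixed size
    return shape


print(accepted_shape(["#0", 5, "#1", "#2", "#3"], (5, 5, 5, 5, 5), [True, False, 1]))
# prints ['*', 5, 5, 1, 5]
```

The identity checks (is True, is False) matter because Python treats True == 1, so a plain equality test would confuse the fixed size 1 with an enabled VDim.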

Setting the VDims needs to happen BEFORE you execute the model for the first time, otherwise SOL will not obey your settings! If you need to change your VDims, call sol.cache.clear() at the beginning of your script.
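
The ordering described above could look like the following sketch. The helper reoptimize_with_vdims is hypothetical. It only uses sol.cache.clear() and sol.optimize(..., vdims=...) as listed on this page, and assumes that clearing the cache immediately before optimizing is equivalent to doing so at the beginning of the script.

```python
def reoptimize_with_vdims(model, example_inputs, vdims):
    """Hypothetical helper: apply new VDims settings before the first model run."""
    import sol  # assumption: SOL is installed and importable as `sol`

    # Drop previously built models so the new VDims settings are not ignored.
    sol.cache.clear()

    # Set the VDims at optimization time, before the model is executed.
    sol_model = sol.optimize(model, example_inputs, vdims=vdims)
    return sol_model
```

The returned model should only be executed after this call, so that the first execution already sees the intended VDims.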